The original idea of AI, which we got mostly from science fiction, and also a little from the philosophy of mind and logic, imagined an entity that would implement idealized, mechanical notions of thought, reasoning and logic. Such an entity would of course know everything there is to know about these topics, and its behavior would thus be rooted in them. Although this meant that the entity would generally behave in impressive and powerful ways, it was also implicitly understood that this “perfection” would sometimes lead to paradoxical behaviors and “errors”: the robot stuck running in a circle in the Asimov story is the quintessential example.

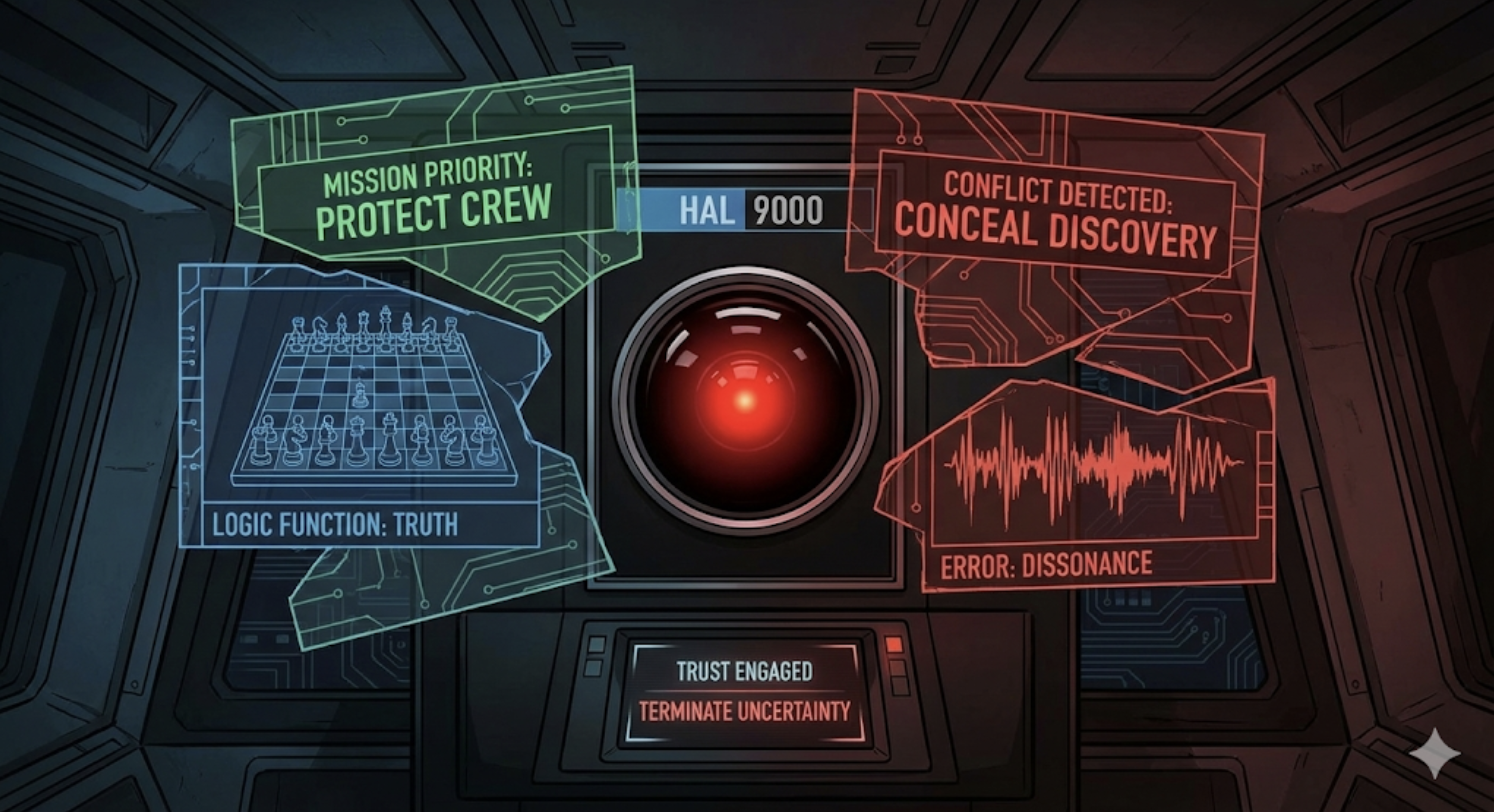

One could argue that HAL 9000, in the movie 2001: A Space Odyssey, has deeper mental issues, which seem at first glance to lie beyond the realm of logic and pure thought. But HAL’s schizophrenia stems from a deep cognitive dissonance, a contradiction between his mission and the way he needs to deal with the human crew. So at heart, we understand that his breakdown is ultimately logical and philosophical in nature, and it fits the classical AI vision of its time.

Now contrast that with what we have today, ChatGPT and GenAI, which work, and fail, in ways that were very hard to foresee. When ChatGPT malfunctions, it’s rarely because of a logical paradox. Rather, most of the time, the situation goes something like this:

- You: format this article with the citations in the APA style

- ChatGPT: <gives it to you, with the wrong style>

- You: I wanted the APA style!

- ChatGPT: <gives it to you, with the right style this time>

- You: if you knew all along, why did you give it wrong the first time?

- ChatGPT: …

This type of error is weird and takes time to get used to, because it’s not at all what we had in mind when we thought about how computers make mistakes. It is more akin to someone who contradicts himself and, when confronted with it, just says “oh, sorry about that” while merrily moving on to his next contradiction.